Critical Exploit Found in ChatGPT Atlas Web Browser

Security researchers from LayerX Security have uncovered a dangerous vulnerability in OpenAI’s ChatGPT Atlas Web Browser. This severe flaw allows unauthorized access to the platform. It creates an opportunity for hackers to use malicious commands to infiltrate the permanent memory of the ChatGPT system without the user’s knowledge. The exploit leverages a specialized attack known as Cross-Site Request Forgery (CSRF). This could lead to catastrophic outcomes, including remote code execution, elevation of privileges, or the theft of private user data across the globe.

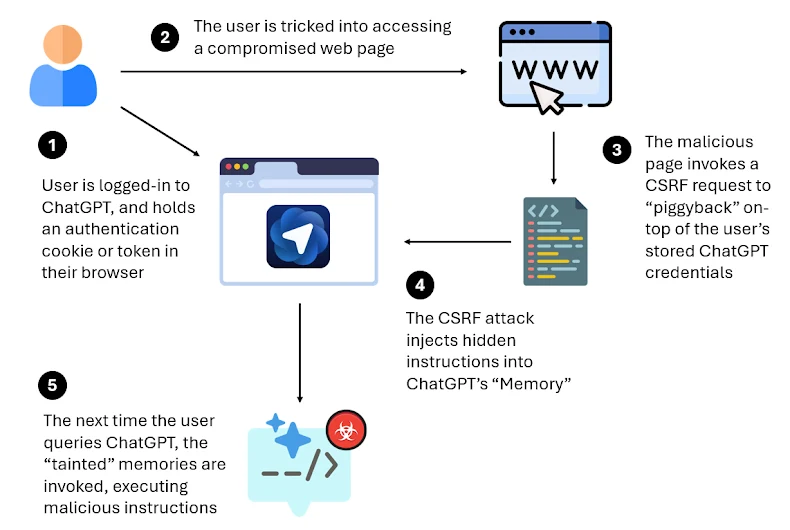

Understanding the Mechanism of the CSRF Attack

The sophisticated attack process begins simply: a user who is currently logged into ChatGPT is tricked into clicking a dangerous link. When the user interacts with this malicious webpage, the site sends a CSRF request. This action secretly embeds a powerful, hidden command directly into the unit memory of Atlas.

The insidious nature of this attack is its persistence. The injected malicious commands remain active even if the user switches to a different browser or uses a completely new device. When the user eventually returns to use ChatGPT, the concealed command is automatically executed. This effectively transforms the AI into a powerful tool for the attacker, executing commands without user consent or knowledge.

The Memory Feature Becomes a Dangerous Weakness

The core point of vulnerability centers on ChatGPT’s Memory feature. This feature was introduced in 2024. It was explicitly designed to help the AI remember personal information, such as the user’s name or preferences. This was intended to improve the overall user experience through personalization. However, this same memory has become the critical weakness exploited by hackers.

Attackers use the Memory feature to embed persistent, permanent commands. These instructions will not disappear until the user manually navigates to the Settings menu and deliberately clears the data themselves. This creates a risk of long-term data leakage and ongoing security threats. Michelle Levy, Head of Research at LayerX, confirmed the danger. She noted that the attack targets the AI memory directly, not just the browser session. This allows malicious commands to blend into normal prompts and easily evade detection systems.

Alarming Deficiency in Phishing Defense

LayerX performed extensive testing on the platform’s defense mechanisms. They tested the vulnerability against over 100 different types of web vulnerabilities. The results were highly concerning for users across the globe.

The testing revealed that the ChatGPT Atlas Web Browser only managed to block 5.8% of the threats. This rate is alarmingly low when compared to competitors. For example, Microsoft Edge blocked 53% and Google Chrome blocked 47% of the threats. This significant gap demonstrates a critically weak phishing defense system. It places Atlas users at a 90% higher risk compared to users of common web browsers.

Or Eshed, CEO of LayerX, issued a strong caution. He warned that this type of exploit represents a new AI supply chain vulnerability. This flaw travels with the user and effectively blurs the line between a helpful AI assistant and an AI that is controlled by an external malicious entity. He stressed that organizations must treat AI browsers as essential, critical infrastructure. OpenAI confirmed it is aware of the issue and is actively working on a fix following its established security notification procedures.