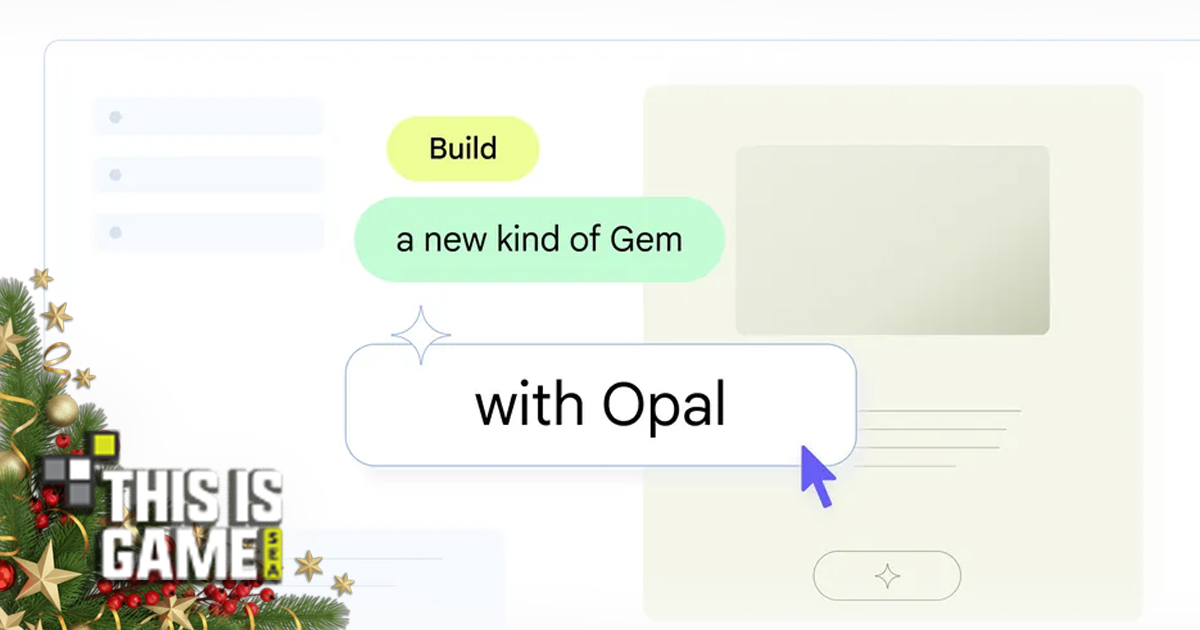

Google has announced that it is bringing Opal, its application builder driven by natural-language prompts (vibe coding), directly into the web version of Gemini. The apps built this way are called Gems: specialized AI assistants tailored to specific tasks.

Gems launched in 2024 as expert AI assistants for roles such as learning coach or career advisor, but the arrival of Opal elevates them into more complex mini-apps. Users simply describe what they want in plain, everyday language, for example an app that summarizes YouTube clips and writes social posts. Opal then directs the Gemini models to work together and build the app's structure on the fly.

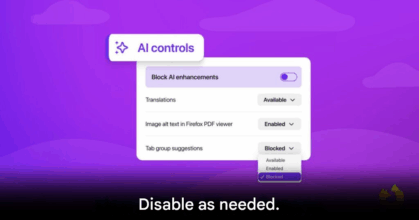

With Opal integrated into Gemini, users can reach the tool through the Gems manager page in the Gemini web app. There they can see how the AI works step by step and can move, rearrange, or link the parts to their liking without touching a single line of code.

Beyond simplicity, Google has also made the visual editor smarter with a new view that turns a user's prompt into an easy-to-understand list of workflow steps. This lets ordinary users with no technical background see the overall structure of the app more clearly, and it lowers the barrier for beginners who want an AI app of their own to save time in everyday life.

For those who want more advanced functionality, Google also offers the Advanced Editor on the opal.google.com website. Mini-apps created there can not only perform repetitive tasks with precision but can also be reused or shared with others. It marks a shift from simple Q&A chats to building lasting, practical tools.

The update reflects the vibe-coding trend of 2025, which is shifting the human role from coder to designer and orchestrator.

Source: TechCrunch